In our previous article, we discussed how any development team can use tools available in the market to analyse the source code of the open-source components used by its product, application or service. Today, most software development models push security testing and validation to the retrospective phase, by which time feature development is over and the final application is ready for testing. Attack vectors uncovered at this stage because of an open-source library often demand additional development effort or rigorous testing. Sometimes that testing is needed just to determine the impact and severity of the uncovered vulnerability and to make sure an attacker cannot exploit it in the production system.

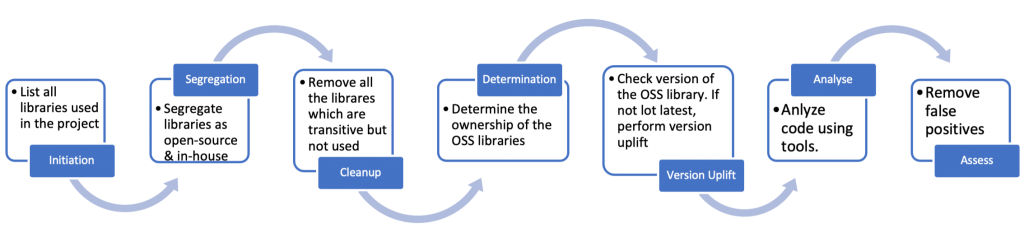

This problem can be avoided by following a proper Risk Assessment & Evaluation Process. Open-source components either introduce vulnerabilities directly into the project or expose them through improper use by the development team. Either way, unknown vulnerabilities pose a serious threat, as they can compromise any part of the CIA (Confidentiality/Integrity/Availability) triad. The practice below was developed and verified in our project, and it offers step-by-step guidance that any development team can follow when handling unknown vulnerabilities in open-source components.

- Initiation Step: Any product typically uses many in-house and OSS libraries, so the development team should first create a bill of materials (BOM) listing every library used in the project. This inventory is also useful for patch management of the libraries used in the product.

> This can be as easy as running `npm list --all --json` for Node.js (`--all` includes transitive dependencies) or `mvn dependency:tree` for a Maven project.
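As a minimal sketch of turning that output into a flat BOM, the Python function below walks the nested `dependencies` objects that `npm list --json` emits and collects unique name/version pairs. It assumes the JSON was produced with `--all` so transitive dependencies are included; the sample document and the function name `flatten_npm_tree` are illustrative, not part of any tool.

```python
import json
from typing import Dict, Set, Tuple


def flatten_npm_tree(tree: dict) -> Set[Tuple[str, str]]:
    """Collect every (name, version) pair from `npm list --all --json` output."""
    bom: Set[Tuple[str, str]] = set()

    def walk(deps: Dict[str, dict]) -> None:
        for name, info in deps.items():
            # A set de-duplicates packages that appear under several parents.
            bom.add((name, info.get("version", "unknown")))
            walk(info.get("dependencies", {}))

    walk(tree.get("dependencies", {}))
    return bom


# Illustrative input shaped like `npm list --all --json` output. In practice you
# might capture it with subprocess.run(["npm", "list", "--all", "--json"], ...).
sample = json.loads("""
{
  "name": "my-service",
  "version": "1.0.0",
  "dependencies": {
    "express": {
      "version": "4.18.2",
      "dependencies": {
        "body-parser": {"version": "1.20.1"},
        "cookie": {"version": "0.5.0"}
      }
    },
    "lodash": {"version": "4.17.21"}
  }
}
""")

for name, version in sorted(flatten_npm_tree(sample)):
    print(f"{name}@{version}")
```

Running it prints `body-parser@1.20.1`, `cookie@0.5.0`, `express@4.18.2` and `lodash@4.17.21`, one per line. A Maven project would need a separate parser for the `mvn dependency:tree` output.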

- Segregation Step: With the inventory of libraries in place, the development team should separate in-house dependencies from OSS dependencies. This is part of due diligence, as customers may require a list of open-source components to rule out licensing or compliance issues.

- Clean-up Step (Optional): Some OSS dependencies are not shipped as a single library but as a framework that pulls in further dependencies of its own; these are called transitive dependencies. For example, adding the Spring Boot dependency to a Java project brings in many transitive dependencies, and often not all of them are used by the product. Product teams are therefore advised to exclude the unused ones, which reduces the number of BOM entries and the attack surface exposed through vulnerabilities in those transitive dependencies. The steps for doing this are described in our previous article.

- Responsibility Determination: In most cases, responsibility for a component stamped “In-House Development” is shared. With this all-important distinction in mind, we can differentiate between three variants of dependency chains, each with its own handling strategy:

  - Direct dependencies from one of the project’s components: It is the responsibility of the given project to continue the process for these (or, better, to do so in cooperation with other projects that have the same dependencies).

  - Dependency graphs involving at least one in-house component and at least one project component: Welcome to the grey zone! Ideally, work through the following steps together with the internal teams that own the in-house components.

  - Dependency graphs involving only in-house components: Here the project may lean back and wait to be told by the internal teams behind the in-house components whether anything needs to be kept in mind when using them.


- Version Uplift: Development teams do not always use the latest version of an OSS library, either through lack of awareness or because they rely on features of a specific version. Teams should agree on a target version, weighing the age and complexity of the candidate versions, and share the analysis effort with as many other teams as possible. Look up known vulnerabilities for each candidate version, even when none has been reported to the team so far. Prefer the latest version of the library, as it includes fixes for issues found in earlier releases and so reduces the chance of shipping a vulnerability through the OSS library.

- Analyse: Once the final list of OSS libraries at their latest versions is available, the source should at minimum be scanned with a static analysis tool such as Fortify or Checkmarx and then, depending on the risk and delivery mode, with a dynamic scanning tool such as ZAP or Burp. Scanning the whole project together with libraries as large as Spring Boot (Java), Hibernate (Java) or Express (Node.js) is sometimes impractical because of the number of lines these libraries contain. In those cases, it is advisable to scan these libraries on their own to uncover pitfalls left in the source code by the OSS library's developers. (With a tool like Fortify, we could even produce rule sets for individual components that allow data flows to be tracked across components.)

- Assess: As described in the previous step, some libraries are hard to scan together with the actual product code. Security experts should validate the reports generated by the tools, removing false positives and uncovering additional scenarios that arise from improper use of components by product developers. This assures management and architecture teams that the libraries follow security best practices.
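For the Segregation Step, a minimal sketch is to split the BOM by naming convention. The code below assumes, purely for illustration, that in-house artifacts use the hypothetical Maven group prefix `com.example.` or the npm scope `@example/`; an organisation with a different convention (or an internal registry it can query) would swap in its own rule.

```python
from typing import Iterable, List, Tuple

# Hypothetical naming conventions for in-house artifacts; replace with your own.
IN_HOUSE_PREFIXES: Tuple[str, ...] = ("com.example.", "@example/")


def segregate(components: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split component coordinates into (in_house, oss) lists by prefix."""
    in_house: List[str] = []
    oss: List[str] = []
    for coordinate in components:
        # str.startswith accepts a tuple and matches if any prefix fits.
        if coordinate.startswith(IN_HOUSE_PREFIXES):
            in_house.append(coordinate)
        else:
            oss.append(coordinate)
    return sorted(in_house), sorted(oss)


bom = [
    "com.example.billing:billing-core:2.3.0",
    "org.springframework.boot:spring-boot-starter-web:3.2.0",
    "@example/ui-kit@1.4.0",
    "lodash@4.17.21",
]
in_house, oss = segregate(bom)
print("In-house:", in_house)
print("OSS:", oss)
```

The OSS list is the one to hand to customers for licence and compliance review; anything the prefix rule misclassifies should be corrected by hand.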
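For the optional Clean-up Step, the sketch below computes every transitive dependency reachable from the direct ones and subtracts those the product is known to use, leaving candidates for exclusion. The component names and the `used_by_product` set are hypothetical; in a real project that set would come from import analysis or tests, and each candidate must be confirmed, because a framework can need a transitive dependency at runtime even when product code never references it.

```python
from typing import Dict, List, Set


def transitive_dependencies(direct: Set[str], graph: Dict[str, List[str]]) -> Set[str]:
    """Return everything reachable from `direct`, excluding the direct dependencies."""
    seen: Set[str] = set()
    stack = list(direct)
    while stack:  # iterative depth-first traversal; `seen` guards against cycles
        for child in graph.get(stack.pop(), []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen - direct


def exclusion_candidates(
    direct: Set[str], graph: Dict[str, List[str]], used_by_product: Set[str]
) -> List[str]:
    """Transitive dependencies that the product does not appear to use."""
    return sorted(transitive_dependencies(direct, graph) - used_by_product)


# Hypothetical dependency graph: parent -> children.
graph = {
    "web-framework": ["http-server", "template-engine", "xml-binding"],
    "template-engine": ["expression-lang"],
}
direct = {"web-framework"}
used_by_product = {"http-server"}

print(exclusion_candidates(direct, graph, used_by_product))
```

In Maven, a confirmed candidate would typically be dropped with an `<exclusions>` element on the dependency that pulls it in.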
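The three variants under Responsibility Determination can be expressed as a small classification function. This is a sketch under an assumed model: each chain is given as the owners of the components that lead from the application to an OSS library, where `"project"` means owned by this project and `"in-house"` means owned by another internal team; the enum and its labels are illustrative.

```python
from enum import Enum
from typing import Sequence


class Responsibility(Enum):
    PROJECT = "project continues the process (ideally with other consuming projects)"
    SHARED = "grey zone: work together with the owning internal teams"
    IN_HOUSE = "owning internal teams advise the project"


def classify(chain_owners: Sequence[str]) -> Responsibility:
    """Classify a dependency chain by who owns the components leading to the OSS library."""
    owners = set(chain_owners)
    if not owners or not owners <= {"project", "in-house"}:
        raise ValueError(f"unexpected chain owners: {list(chain_owners)}")
    if owners == {"project"}:
        return Responsibility.PROJECT
    if owners == {"in-house"}:
        return Responsibility.IN_HOUSE
    # Any mix of project and in-house components falls into the grey zone.
    return Responsibility.SHARED


print(classify(["project"]).name)
print(classify(["project", "in-house"]).name)
print(classify(["in-house", "in-house"]).name)
```

The three calls print `PROJECT`, `SHARED` and `IN_HOUSE`. Rejecting unknown owner labels keeps a mistyped inventory entry from silently landing in the wrong bucket.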
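For the Version Uplift step, the sketch below checks candidate versions against known affected ranges and picks the newest version outside all of them. The advisory ranges and the release list are invented for illustration; real data would come from a vulnerability database, and the simple numeric parser ignores pre-release tags, which a production tool should handle with a proper version library.

```python
from typing import List, Optional, Tuple

Version = Tuple[int, ...]
# (introduced, fixed) pairs; fixed=None means no fixed release exists yet.
AffectedRanges = List[Tuple[str, Optional[str]]]


def parse(version: str) -> Version:
    """Parse a plain numeric version such as '2.0.1' (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def is_affected(version: str, ranges: AffectedRanges) -> bool:
    """True if `version` lies in any [introduced, fixed) range."""
    v = parse(version)
    return any(
        parse(introduced) <= v and (fixed is None or v < parse(fixed))
        for introduced, fixed in ranges
    )


def pick_target(available: List[str], ranges: AffectedRanges) -> Optional[str]:
    """Newest available version that falls outside every known affected range."""
    clean = [v for v in available if not is_affected(v, ranges)]
    return max(clean, key=parse) if clean else None


# Hypothetical release history and advisories for one OSS library.
available = ["1.2.0", "1.3.0", "1.3.1", "2.0.0", "2.0.1"]
advisories: AffectedRanges = [("1.0.0", "1.3.1"), ("2.0.0", "2.0.1")]

for version in available:
    print(version, "affected" if is_affected(version, advisories) else "no known issue")
print("Target:", pick_target(available, advisories))
```

Here the target is `2.0.1`. A clean result only means no vulnerability is known yet for that version, so the Analyse and Assess steps still apply.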

Security is an evolving process. No tool covers every scenario, so the product team remains responsible for issues or vulnerabilities introduced by the OSS libraries it ships. At the same time, OSS libraries cannot simply be avoided, since replacing them would overburden the development teams with rewriting the whole code themselves. Hence, the security and development teams should work in close collaboration, so that any potential vulnerability can either be removed or be mitigated in code to reduce the attack surface.

Sagar is a Senior Developer & Product Security Lead with SAP Labs, based in Bangalore, Karnataka. He is a CISSP-certified professional, a certified cloud computing architect, a Blockchain Community Certified Architect and a database solution advisor, with over 10 years of experience in secure software design and cross-platform architectural design. He has published many papers in international journals from publishers such as IEEE and Springer on cross-domain communication and interoperability for cutting-edge solutions.