Legislation to punish social media platforms is misdirected. Such legislation tries to strike a balance between protecting free speech and curbing the flow of disinformation. The mechanisms to do both already exist within the platforms, but using them impedes profitability. While all the platforms are guilty of this negligence, Facebook is the poster child for the problem. Cyberprotection Magazine conducted two experiments in the past month, and the results formed our position.

The Facebook community standards state that, “In an effort to prevent and disrupt offline harm and copycat behavior, we prohibit people from facilitating, organizing, promoting, or admitting to certain criminal or harmful activities targeted at people, businesses, property or animals.” Facebook further claims to invest in “technology, processes, and people to help us act quickly.”

In one experiment over the course of 24 hours, we identified five Facebook users who consistently violated multiple user requirements, advocated for the overthrow of governments, threatened civil violence, and disseminated dangerously false information. These violations dated back more than a decade without a single action taken against their participation on the platform. When we reported the users to Facebook, the company responded within hours that it had not found that any of them had done anything worthy of discipline.

To its credit, Facebook did remove individual offending comments from a particular thread tied to a post by a prominent politician, but it rejected any discipline that would have removed the offenders from the platform, even those with a history of sharing disinformation and harassing other users.

Security be damned

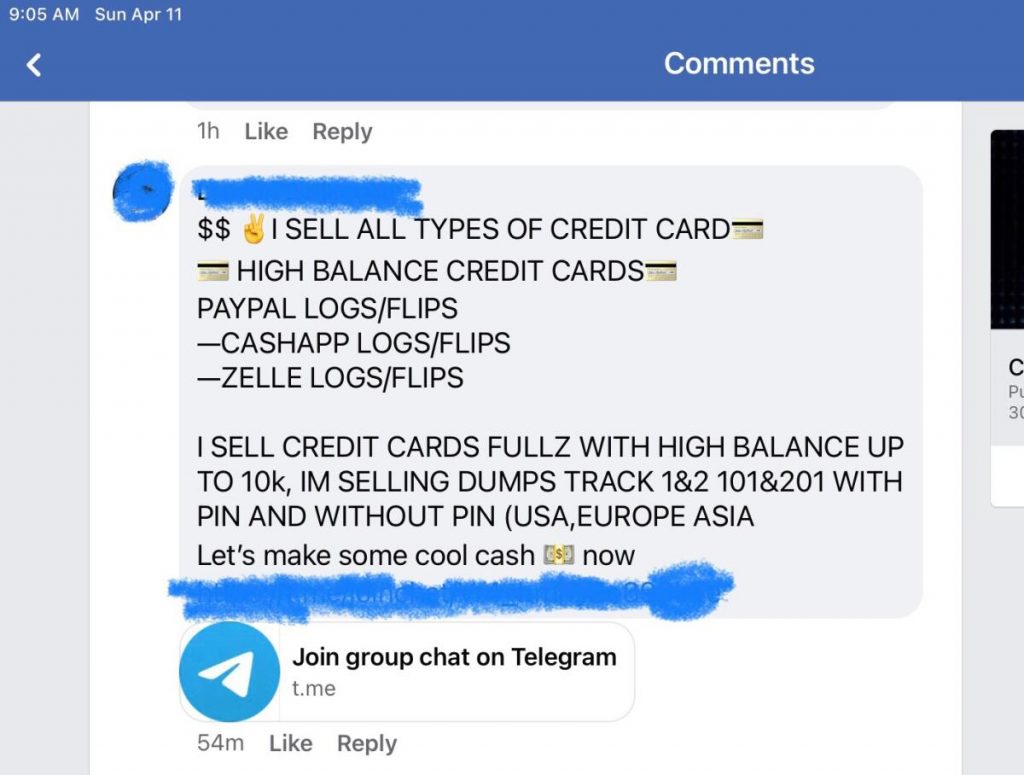

Our concurrent experiment focused on our core competency at Cyberprotection Magazine: security. We joined two cybersecurity groups and found post after post from black-hat hacking services offering stolen passwords, social security numbers and other illegally obtained tools and information. There were legitimate offers of security certification training, but also offers of employment from unnamed nation-states.

Facebook puts all of the responsibility for monitoring these kinds of infractions on group administrators. In these two cases, the administrators disclaimed that responsibility. One of them stated the reason the page existed was “because current surveys shows that mostly people want to hack social media accounts as Facebook, WhatsApp, Instagram, Twitter and more. We are not responsible for any fraud.”

It is possible to report group members to the admin, but not for illegal activity. That is an option only for reporting individuals on personal pages. One might think that there is an algorithm that would be able to find these violations, but in one month of investigation we found no evidence that Facebook is actively shutting down illegal operations.

At the 2019 Techno Security & Digital Forensics Conference, a senior executive from RSA demonstrated how to buy active credit card numbers over Instagram using just a few search terms. Many of the same offers are still available. Instagram is a subsidiary of Facebook, which has done nothing to stop that activity. Based on our research, 1,000 stolen credit card numbers can be purchased for $6.

We were not surprised. There is one reason why social media platforms do not stop violations of their own standards: profit. The more traffic they generate, the more valuable their advertising services and user data become. They have no financial interest in stopping illegal activity because it drives traffic and advertising.

Social media does provide a valuable service to most people. Connecting friends and families, sharing information and engaging in reasoned debate on issues make a stronger society. The industry likes to claim it protects free speech and privacy, but disinformation, slander and crime are not protected speech. The recent Facebook data breaches (including Mark Zuckerberg’s personal information), with most of the data available on the dark web, show that privacy is definitely not a concern. Rather than debate those issues, legislators should focus on the ability of the platforms to enforce their platform standards.

Criminal activity ignored

We are calling for a law similar to “truth in advertising.” If a platform cannot effectively protect its users, it needs to state that unequivocally. If it insists it can, it needs to demonstrate that ability better than it is doing now.

For example, in the most recent hack of more than 500 million users, Facebook was told by government researchers in Belgium, Canada and Ireland that the contact import feature had been vulnerable going back to 2012. Legislation should require platforms, once informed of vulnerabilities, to fix or remove features that violate user privacy. Should they fail to do so in a timely manner, the platform should have to pay a fine of, say, $50 per user occurrence. At that rate, 500 million affected users would put the total fines for the current violation at more than $25 billion. Similar fines could be levied for any content that violates public platform standards.

The major platforms (Facebook, Twitter, and LinkedIn) could simply abandon the pretense of protecting users from breaches and harassment. Gab and Parler make no such claims and their users seem to prefer it that way. Retreating from protecting users, however, would probably cause a mass exodus of users and tank profitability. Until then, legislators don’t need to worry about hard-to-define concepts like what speech is protected and how much privacy is enough. They just need to hold the platforms to their word.

Lou Covey is the Chief Editor for Cyber Protection Magazine. In 50 years as a journalist he has covered American politics, education, religious history, women’s fashion, music, marketing technology, renewable energy, semiconductors, and avionics. He is currently focused on cybersecurity and artificial intelligence. He published a book on renewable energy policy in 2020 and is writing a second one on technology aptitude. He hosts the Crucial Tech podcast.

Fines will become a simple “cost of doing business” like we’ve seen in other industries. They should be fully liable – both civilly and criminally, as applicable – for what they publish. That should apply whether their “community standards” allow the content, or their lax enforcement practices let it “slip through.”

Fines would be useful, but opening them up to civil and criminal liability is a bigger stick.

I note that a lawsuit has been filed against Facebook in the EU for violation of GDPR protections. It could end up costing billions.